1. Juni 2018

Analysing Strava activities using Colab, Pandas & Matplotlib (Part 2)

How do you analyse Strava activities—such as runs or bike rides—with Colab, Python, Pandas, and Matplotlib? In my second article on this topic, I am demonstrating how to group and aggregate the data in many different ways.

How do you analyse Strava activities—such as runs or bike rides—with Colab, Python, Pandas, and Matplotlib? In my second article on this topic, I am demonstrating how to group and aggregate the data in many different ways.

My previous post in this series left most initial research questions open:

- Do I run longer distances on the weekend than during the week?

- Do I run faster on the weekend than during the week?

- How many days do I rest compared to days I run?

- How many kilometers did I cover during run-commutes?

- Which month of the year do I run the fastest?

- Which month of the year do I run the longest distance?

- Which quarter of the year do I run the fastest?

- Which quarter of the year do I run the longest distance?

- How has the average distance per run changed over the years?

- How has the average time per run changed over the years?

- How has my average speed per run changed over the years?

- How much distance did I run in races and how much during training?

- and so on and so on…

Where the previous article left off

In the previous article, we have gotten as far as creating a Pandas data frame containing the individual Strava activities as rows, indexed by both date and type, and showing the respective distance covered during the activity (in km), and the duration of the activity.

In this article, I have added an additional column showing whether the activity was replacing a commute by other means of transport. Also I added a column that counts the activities per row: this count is trivially 1 here because every row corresponds to exactly one activity at the current aggregation level. This column isn’t strictly necessary but you will see later how it turns out to be useful.

distance elapsed_time commute count date type 2015-05-16 06:56:53 Hike 50km 680min 0 1 2015-05-25 08:16:03 Ride 35km 166min 0 1 2016-08-25 06:50:34 Ride 5km 23min 0 1 2017-02-01 07:42:11 Run 10km 57min 0 1 2018-05-15 06:42:09 Ride 8km 45min 1 1

Caveats

- I discovered what appears to be a known bug in Pandas here, where summing across booleans (i.e. counting true values) does not work as expected: https://github.com/pandas-dev/pandas/issues/7001. This is why I chose to use integers (0 or 1) instead of booleans to mark whether an individual activity is a commute.

- I have not yet finished cleaning the data. For example, I found that I did not stop a recording for a particular activity, and hence the elapsed time was 32 hours. This distorts the data. I started cleaning up my recordings, but Strava only allow me to export my activities once per week so the data here is inaccurate in places. You shouldn’t really care, but it illustrates that data analysis really starts with understanding how the data is collected.

Download Colab notebook

You can find the Colab notebook which I used for this article here:

File: Analysing_Strava_activities_using_Colab,_Pandas_and_Matplotlib_(Part_2).ipynb [14.72 kB]

Category:

Download: 1287

You can open this Colab notebook using Go to File>Upload Notebook… in Colab.

Looking more at individual activities

For each activity type, what were the top three activities in which I covered the longest distance?

print( activities['distance'] .groupby('type').nlargest(3) .to_string(float_format='%.1fkm'))

This prints:

type date type

Hike 2015-05-16 06:56:53 Hike 49.8km

2015-05-02 08:21:51 Hike 49.5km

2016-06-18 06:38:00 Hike 39.5km

IceSkate 2015-12-31 09:07:23 IceSkate 55.9km

2015-12-30 09:31:07 IceSkate 51.4km

2015-12-28 09:06:35 IceSkate 38.4km

Ride 2015-05-23 08:17:36 Ride 123.5km

2015-06-09 08:14:30 Ride 110.4km

2015-05-24 07:59:17 Ride 105.7km

Run 2016-11-26 08:00:00 Run 80.5km

2017-10-07 08:01:57 Run 77.6km

2017-01-14 08:40:00 Run 70.0km For each activity type, what were the top three activities on which I spent the most time?

print( (activities['elapsed_time'] .groupby('type').nlargest(4) / 60) .to_string(float_format='%.1fh'))

This prints:

type date type

Hike 2015-05-02 08:21:51 Hike 12.1h

2015-05-16 06:56:53 Hike 11.3h

2016-06-18 06:38:00 Hike 8.9h

IceSkate 2015-12-31 09:07:23 IceSkate 5.6h

2015-12-30 09:31:07 IceSkate 4.7h

2015-12-28 09:06:35 IceSkate 4.7h

Ride 2015-06-10 07:50:46 Ride 9.6h

2015-06-09 08:14:30 Ride 9.5h

2015-05-24 07:59:17 Ride 9.3h

Run 2017-10-07 08:01:57 Run 17.8h

2016-11-26 08:00:00 Run 11.1h

2017-05-01 07:21:44 Run 7.7h Grouping and aggregation is key

We have already seen that Pandas allows us to fairly easily aggregate this tabular data. However, Pandas does not only support slicing, dicing, and summing across the data frame, but also more sophisticated operations to group and aggregate the data. We shall look at them now as these grouping and aggregation operations will enable us to quickly answer above questions, and also help us visualising the data (visualization will be covered in a follow up article).

The key here is that many of the above questions do not need data showing individual activities. Instead they require an aggregation by day. Also, in order to compute e.g. the average distance run on Sundays, we need to know how many Sundays there were in the time period under consideration. Let’s look how we can address both requirements with Pandas.

Creating a summary for every day and every activity type

Let’s start with aggregating the activities by day:

activities_by_day_type = ( activities .groupby([pd.Grouper(freq='D', level=0), 'type']).sum())

In this case, summing makes sense for all columns: if there was more than one activity per day, for each row we will get the total distance, the total duration, and the number of commutes for all activities during that day. This is how a few rows from the data frame activities_by_day look right now:

distance elapsed_time count

date type

2016-09-18 Run 28km 183min 1

2016-09-19 Ride 6km 30min 1

2016-09-20 Ride 9km 36min 2

2016-09-21 Ride 16km 85min 4

2016-09-22 Ride 12km 51min 2

Run 5km 26min 1

2016-09-26 Ride 7km 52min 1

2016-09-27 Ride 10km 46min 2 Now we have the total distance, the total duration, and the total count by day and by type. This is where the little trick with adding the “count=1” column to the original data frame pays out: we get the number of activities that were aggregated into a single row. There are other ways to get such a count, but this is a straight-forward one. We were able to use the pd.Grouper here because the date column is a so-called DateTimeIndex—Pandas has a lot of support for manipulating time, and it is very important once you have data that involves a time dimension.

From sparsely indexed to densely indexed data frame

The first requirement is satisfied: we have activities aggregated by day and by type. But the representation is sparse, i.e. we do not have data for every day and every activity. This is a problem if we e.g. try to calculate the average distance run per day because the number of rows does not correspond to the number of all days. There are at least two solutions: the first solution is to calculate the average by summing and then divide by the separately calculated number of all days. The second solution—which takes full advantage of Pandas and allows us to leverage its capabilities to compute various statistics—is to transform the sparsely indexed data frame into a densely indexed data frame such that there is a data point for each day and each activity. We will take the second route here:

activities_by_day_type = ( activities_by_day_type .reset_index(level='type') .to_period('D') .reset_index() .set_index(['date', 'type']))

There might be an easier solution but in the above code snippet, we convert the Pandas DateTimeIndex to a Pandas PeriodIndex, which is more suitable for regular periods of time. We achieve this in steps: (1) we turn the type level of the index into a column such that the resulting data frame is indexed only by date, (2) we convert the date index into a periodic index (PeriodIndex as opposed to DateTimeIndex) such that we can merge the existing sparse index with the dense index that we will construct later on, (3) we set the data frame to use the (periodic) date and type as index.

As the next step in converting our sparsely indexed data frame into a densely indexed data frame, we first construct a dense index by constructing the Cartesian product of all days and all types. Second,—this is a very powerful method to remember—we call the method reindex, which indexes the existing data using the new (dense) index; we fill in missing values in all columns with zeros which makes sense here.

first_day = activities_by_day_type.index.levels[0].min() last_day = activities_by_day_type.index.levels[0].max() days = pd.period_range(first_day, last_day, freq='D') types = activities_by_day_type.index.levels[1].unique() index = pd.MultiIndex.from_product([days, types], names=['day', 'type']) activities_by_day_type = activities_by_day_type.reindex(index, fill_value=0)

Let’s look at a few continuous rows in activities_by_day:

distance elapsed_time commute count

day type

2018-05-24 Hike 0km 0min 0 0

IceSkate 0km 0min 0 0

Ride 0km 0min 0 0

Run 0km 0min 0 0

2018-05-25 Hike 0km 0min 0 0

IceSkate 0km 0min 0 0

Ride 0km 0min 0 0

Run 0km 0min 0 0

2018-05-26 Hike 0km 0min 0 0

IceSkate 0km 0min 0 0

Ride 0km 0min 0 0

Run 15km 95min 0 1 This is boring. Now most of the data frame consists of zeros because I don’t go ice-skating in summer and generally am not doing sports every day. But you will find out that for doing statistics, we are now in the perfect position.

Answering some research questions

For convenience, let’s define two data frames that aggregate away either the activity type or the day:

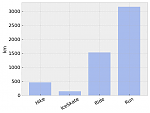

activities_by_day = activities_by_day_type.groupby('day').sum() activities_by_type = activities_by_day_type.groupby('type').sum()

Let’s finally go on answering some questions.

print( activities_by_day[['distance', 'elapsed_time']] .mean() .to_frame('mean') .T .to_string(formatters=detailed_formatters))

Note that activities_by_day[['distance', 'elapsed_time']].mean() would be sufficient in Colab but since I want to include some of the output verbatim in this post, I apply some formatting.

distance elapsed_time mean 3.7km 27.1min

Apparently I cover about 3.7km and 27min per day on average—during activities I recorded.

Asking questions about months, quarters, and years

With Pandas, we can also group and aggregate data to other periods of time. For example, let’s find the day/month/quarter/year for each activity in which I covered the longest distance, and do the same for the number of activities.

frequencies = ['D', 'M', 'Q', 'Y'] for f in frequencies: extremes_by_type = ( activities_by_day_type[['distance', 'count']] .unstack('type') .groupby(pd.Grouper(freq=f)).sum() .agg(['idxmax', 'max'])) extremes_by_type.index = ['month', 'max'] print( extremes_by_type.T .unstack(level=0) .swaplevel(axis=1) .sort_index(axis=1)) print('freq=%s\n' % f)

With the above code snippet, I want to demonstrate that it is possible to compute the maximum (and arg-max) for both count and distance simultaneously while aggregating over different time periods. This is powerful, and now we see why it can make sense to go to the length of getting the data into a Pandas data frame with the right shape.

count distance

D max D max

Hike 2015-01-18 1 2015-05-16 49.7859

IceSkate 2015-12-28 1 2015-12-31 55.9111

Ride 2016-09-21 4 2015-05-23 123.456

Run 2014-07-07 2 2016-11-26 80.4672

freq=D count distance

M max M max

Hike 2015-05 4 2015-05 140.274

IceSkate 2015-12 3 2015-12 145.714

Ride 2016-09 28 2015-06 463.587

Run 2014-07 18 2017-04 217.157

freq=M count distance

Q max Q max

Hike 2015Q1 5 2015Q2 140.274

IceSkate 2015Q4 3 2015Q4 145.714

Ride 2016Q3 39 2015Q2 799.87

Run 2014Q3 31 2017Q2 364.873

freq=Q count distance

Y max Y max

Hike 2015 12 2015 375.507

IceSkate 2015 3 2015 145.714

Ride 2016 55 2015 799.87

Run 2015 72 2016 953.036

freq=Y Supplying our own grouping criteria

With Pandas, you are not limited in how you want to group rows in a data frame. You can supply your own criteria. All you need is to provide a function that produces the key by which the rows should be grouped. I will take advantage of this and find out on which weekdays I cover the longest distance, spend the most time, or the most activities on average. This means aggregating the data for all Mondays together, and then for all Tuesdays, and so on.

weekday_abbr = 'Mon Tue Wed Thu Fri Sat Sun'.split() print( activities_by_day_type[['distance', 'elapsed_time', 'count']] .unstack('type') .groupby(lambda d: weekday_abbr[d.weekday]).mean() .agg(['idxmax', 'max']) .T.unstack(level=0) .swaplevel(axis=1).sort_index(axis=1))

I love hiking on Saturdays and running on Sundays:

count distance elapsed_time

idxmax max idxmax max idxmax max

Hike Sat 0.0390244 Sat 1.34283 Sat 18.7476

IceSkate Mon 0.00487805 Thu 0.272737 Thu 1.62561

Ride Thu 0.117073 Sun 1.6969 Sun 7.98554

Run Sun 0.313725 Sun 5.47772 Sat 48.3502 We can do the same for the months of the year:

months = 'Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec'.split() print( activities_by_day_type[['distance', 'elapsed_time', 'count']] .unstack('type') .groupby(pd.Grouper(freq='M')).sum() .groupby(lambda m: months[m.month-1]).mean() .agg(['idxmax', 'max']) .T.unstack(level=0) .swaplevel(axis=1).sort_index(axis=1))

April is the month of the year in which I do most of my running on average:

count distance elapsed_time

idxmax max idxmax max idxmax max

Hike May 1.25 May 37.9574 May 557.663

IceSkate Dec 0.75 Dec 36.4286 Dec 224.733

Ride Sep 7 Jun 126.46 Jun 759.654

Run Apr 9 Apr 122.326 Aug 1051.24 Of course, we can repeat the same analysis for the quarters of the year:

print( activities_by_day_type[['distance', 'elapsed_time', 'count']] .unstack('type') .groupby(pd.Grouper(freq='Q')).sum() .groupby(lambda d: "Q%d" % d.quarter).mean() .agg(['idxmax', 'max']) .T.unstack(level=0) .swaplevel(axis=1).sort_index(axis=1))

This prints:

count distance elapsed_time

idxmax max idxmax max idxmax max

Hike Q2 1.6 Q2 43.1889 Q2 620.307

IceSkate Q4 0.75 Q4 36.4286 Q4 224.733

Ride Q3 9.75 Q2 187.602 Q2 1016.1

Run Q1 18.75 Q1 234.984 Q3 1723.74 A few notes

I am still learning how to use Pandas efficiently. So while the above code is effectively achieving what I want, there might be far more elegant and idiomatic solutions around. At the end, when using Pandas for interactive data analysis, it is also a trade-off between exploitation and exploration: getting the job done counts, and sometimes the stupid solution that will certainly get you to your goal is the preferred one. On the longer term, exploring new ways on how to leverage Pandas library better is paying out though and this has been one of my motivations to share these articles with you.

Conclusion

In this article, we have seen the various ways of using groupby and agg for grouping and aggregation. We have also seen how useful it is to invest a tiny bit at the beginning to make sure that the time index has the right data type such that further manipulation becomes much easier. While I haven’t explained any of it, we have also seen the use of unstack, swaplevel, and sort_index to rearrange the data in the data frame, thereby seemlessly moving & swapping levels between index and columns.

I hope that you were inspired by this article. In the next article in this series, we will tackle visualization (using Matplotlib) with the particular goal to reveal trends in the data which might not be as evident from summary tables as we computed here. Stay tuned.

Read the next article in this series